Design Conversational AI that Aligns with People's Values

You already know how to build great conversational AI. But what if you could also build it so that your users and stakeholders feel it represents them and their values?

The VSCA Framework

The VSCA Framework (Value-Sensitive Conversational Agent Framework) helps you and your team develop value-sensitive conversational agents: conversational agents that align with the values of all the stakeholders involved. Conversational agents are software that users interact with through conversation, such as chatbots (including those based on Large Language Models) and voice assistants.

Stakeholder-Centered

Stakeholders are anyone with a stake in the conversational agent, i.e. anyone who is responsible for creating it, cares about it, is impacted by it, or benefits from it.

Value-Driven

Values are concepts that are important to people or that they believe in, such as trust, autonomy, transparency, and privacy.

Inclusive Design

This means your conversational agents will respect and uphold the values of the people directly and indirectly involved in creating them or affected by them (including, of course, your users!).

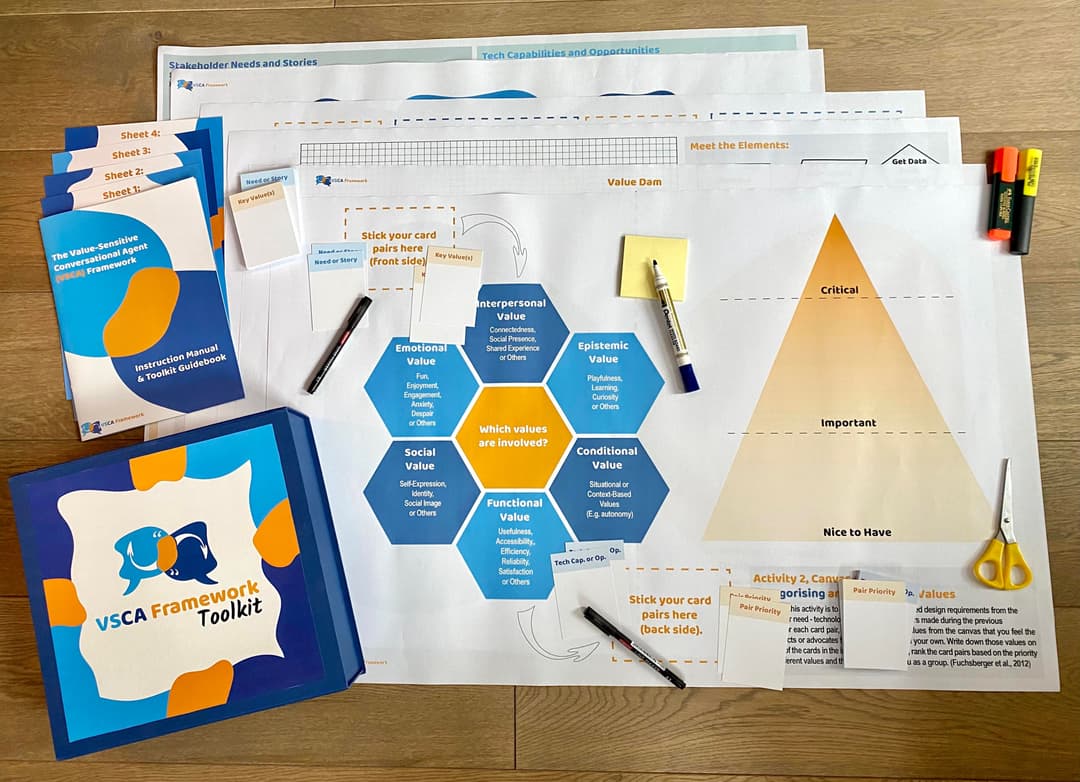

A Practical Toolkit

While a 'framework' sounds generic and abstract, what it really represents is a set of activities that you can build into your existing design processes and workflows, adapting them and centring them on stakeholders' values.

Ready-to-Use Tools

What you actually get is a toolkit full of different tools, with practical guidance on carrying out these activities with different stakeholders. All you have to do is bring people together and follow the activities, without reinventing the wheel or redefining how you and your team work.

Build Your Team's Capabilities

Not only do the framework and toolkit help with your current projects, they also help train you and your team so that you can create human-centred and value-sensitive conversational AI more seamlessly going forward.

The best part? It actually works!

We gave the VSCA Framework & Toolkit to professionals who build conversational AI, including conversation designers, chatbot developers, product managers, and everything in between. They worked everywhere from one-person teams in small start-ups to massive teams in multinationals like Amazon.

In ALL cases:

Users felt that the prototypes professionals created were 50% better aligned with the intended values and 60% better aligned with their own values, compared to a control prototype.

Technical and non-technical professionals involved were able to use the outcomes directly in their workflows.

Users and other stakeholders involved were able to express their values and felt confident they were understood and incorporated.

Learn more about the VSCA Framework

Watch this video to hear from the creator about the VSCA Framework and Toolkit.

Here's what professionals said

Professionals who used the VSCA Framework & Toolkit share their experiences.

“We always talk about human centered design but never about working with human values.”

“Using it gives you a huge awareness and alertness for values… we had our value glasses on.”

“We could really go through and see which values we've considered and where, and then make changes as needed.”

“I'm confident the team is more aware of our values and of the things that they could do or not do to affect these values.”

“My values were really shining through during the whole process.”

“How AI makes you feel connected or isolated… this has completely reframed the way I look at AI.”

Ready to build value-sensitive conversational AI?

Get involved and start using the VSCA Framework & Toolkit today.